Years ago, I wrote Watch-DbaDbLogin which keeps an inventory of accounts, hosts and programs that log into a SQL Server. It was pretty crude, but helped immensely during my migration, as this inventory ensured that my documentation was in order and no unexpected downtime would occur.

I found that about 80-90% of logins/applications were covered within 48-hours, but two months of data gave me total confidence.

I always wanted to update the command, though I’m not sure Watch-DbaDbLogin is still within the scope of the module. It’ll likely remove it in dbatools 1.0 so please accept this far cooler post in its place.

There are several ways to capture logins, all with their own pros and cons. In this post, we’ll outline four possibilities: default trace, audits, extended events and session enumeration.

Note: The code in this post requires dbatools version 0.9.323. I found two bugs while testing sample scenarios 😬 Also, this post addresses tracking logins for migration purposes, not for security purposes. Edit the where clauses as suitable for your environment.

Using a default trace

Using the default trace is pretty lightweight and backwards compatible. While I generally try to avoid traces, I like this method because it doesn’t require remote access, it works on older SQL instances, it’s accurate and reading from the trace isn’t as CPU-intensive as it would be with an Extended Event.

Setup the SQL table

Basically, no matter which way you track your logins, you’ll need to store them somewhere. Below is some T-SQL which sets up a table that is ideal for bulk importing (which we’ll do using Write-DbaDataTable).

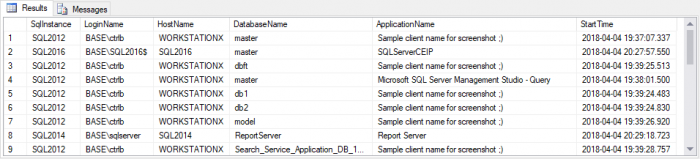

The table is created with an index that ignores duplicate sessions. When IGNORE_DUP_KEY is ON, a duplicate row is simply ignored. So we’re going to setup a clustered index using SqlInstance, LoginName, HostName, DatabaseName, ApplicationName and StartTime. Then the collector will send a bunch of rows via bulkcopy to the table, and the table will ignore the dupes.

To clarify, “duplicate” logins may show up, but not duplicate sessions. Watch-DbaDbLogin only recorded the first time it ever saw a login/db/host/app combination which many people found to be less useful, especially if you run the login tracker for years. What if a login became stale?

If you’d like the first login only, remove StartTime ASC from the index.

Setup the default trace

Setup the collector

Next, you’ll want to setup a collector as a scheduled SQL Agent Job.

How often should you run the job? It depends. I have one server that has login information going back to November. But I’ve found that SharePoint or System Center dedicated instances only have about 20 minutes worth of login data in the default trace.

How long does the collection take? Polling 15 servers took 14 seconds to read 55,000 records and 18 seconds to write that data. Of the 55,000 records, only 115 were unique!

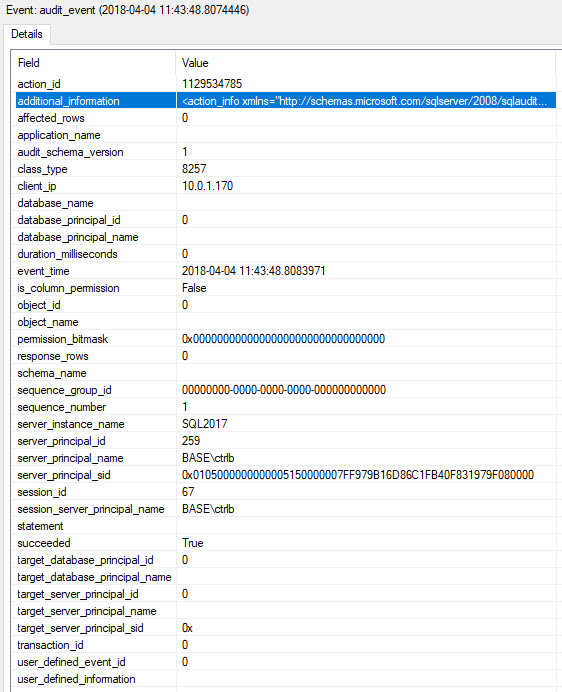

Using a SQL Server Audit

Audits are cool because audits can “force the instance of SQL Server to shut down, if SQL Server fails to write data to the audit target for any reason”. This ensures that 100% of your logins are captured. But my requirements for collecting migration information aren’t that high and I haven’t found the magical Audit Spec that only logs what I need. Here’s what the .sqlaudit file for SUCCESSFUL_LOGIN_GROUP looks like when you rename it to .xel and open it.

Eh, I’m missing so much stuff. And since Audits are Extended Events anyway, and I have more control over what I do and don’t want to see, we’ll skip right to Extended Events.

Using Extended Events

You can also use Extended Events. This option is pretty cool but collecting the data does require UNC access for remote servers.

Setup the SQL table

Login Tracker template

We’ve provided a “Login Tracker” Extended Event session template that you can easily add to your estate.

This template creates a session that:

- Is initiated by sql_statement_starting event

- Collects the minimum possible columns

- Ignores connections from dbatools and SSMS

- Ignores queries to tempdb

- Ignores system queries

- Keeps 50 MB of data on disk (10×5)

I chose sql_statement_starting because it’s the only one that I found that actually included the database name. If this doesn’t work for you, you can modify then export/import the modified Session. If you have a better suggestion, I’d love that. Please let me know; I kinda feel like this one is overkill.

Setup the XESession

Setup the collector

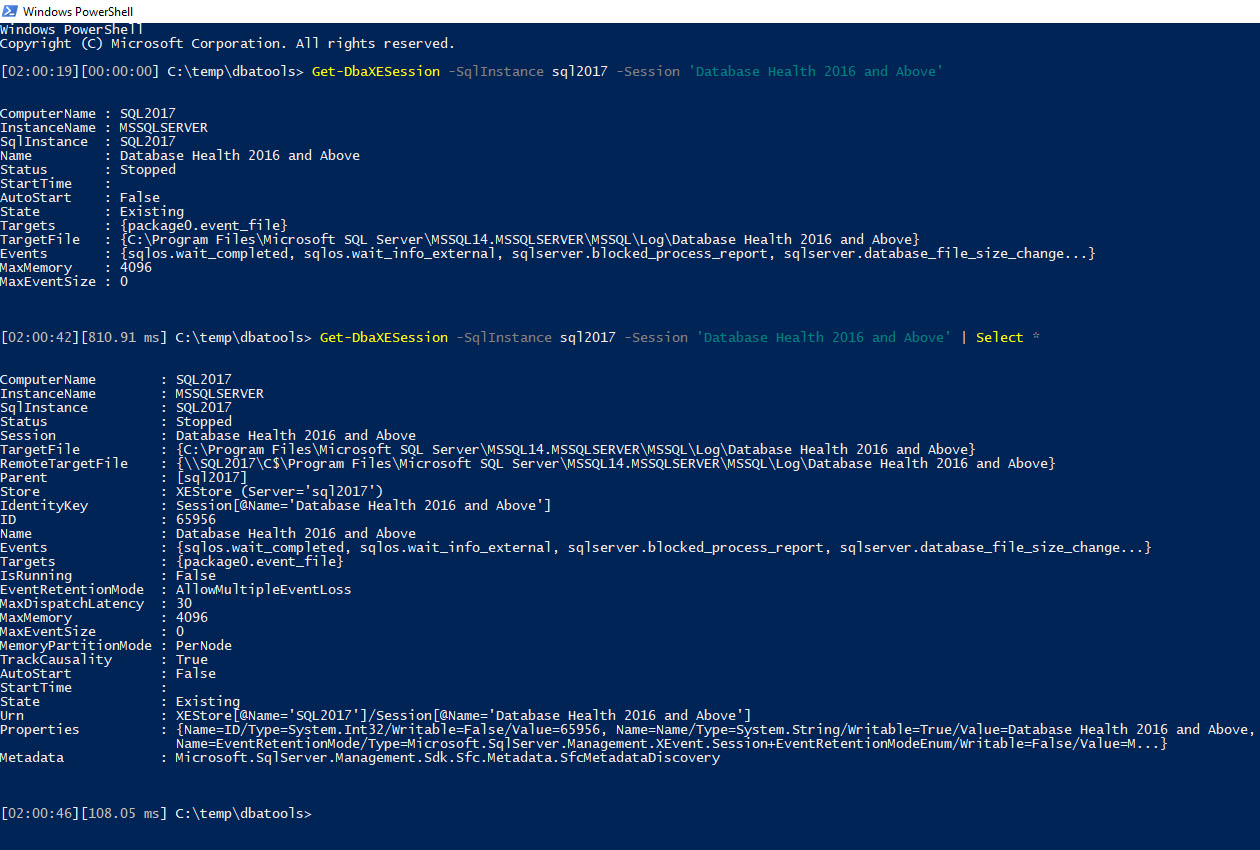

UNC access

So instead of placing the burden of XML shredding on the CPU of the destination SQL instance, Read-DbaXEFile uses the local resources. It does this by using the RemoteTargetFile which is available in Get-DbaXESession but is not a default field. To unhide non-default fields, pipe to SELECT *.

Keep in mind that the entire file is read each time you enumerate. Which is not a big deal, but should be considered if you have millions of logins.

Note that I did set a max on the Login Tracker file size to 50 MB so if you want to modify that, you can use PowerShell or SSMS (Instance ➡ Management ➡ Extended Events ➡ Sessions ➡ Login Tracker ➡ right-click Properties ➡ Data Storage ➡ Remove/Add). There is no dbatools command available to do this in PowerShell yet, so you’ll have to do it manually until it’s added.

Using session enumeration

This one requires no setup at all, but only captures whoever is logged in at the time that you run the command. This approach is what I originally used in Watch-DbaDbLogin (scheduled to run every 5 minutes) and it worked quite well.

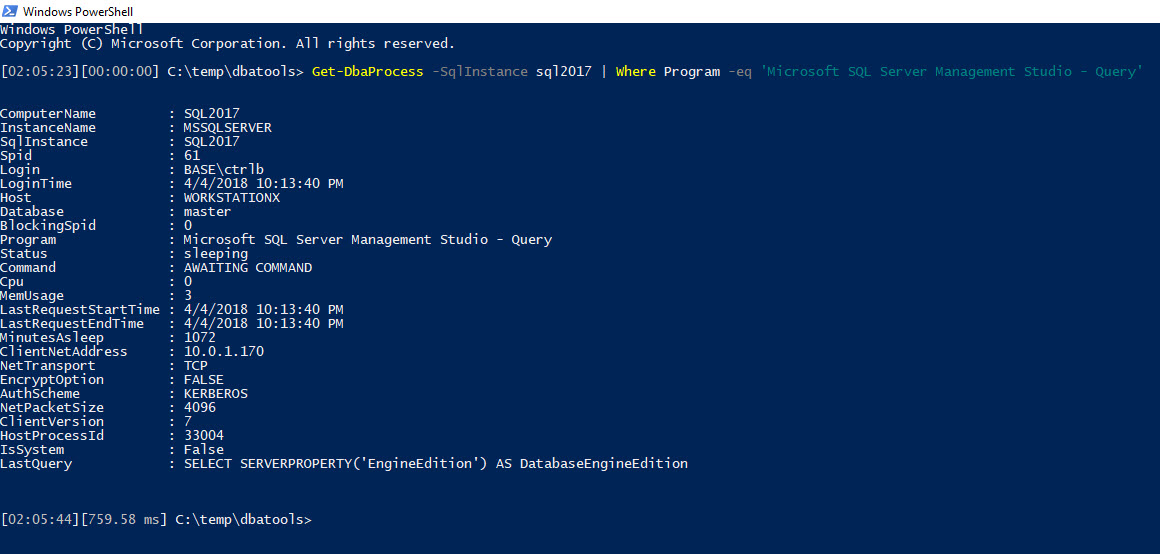

So if you’ve never seen the output for Get-DbaProcess, which does session enumeration, it’s pretty useful. If you’d like something even more lightweight that still gives you most of the information you need, you can use $server.EnumProcesses()

Actually, scratch all that. Let’s go with some lightweight, backwards-compatible T-SQL that gets us only what we need and nothing more. Honestly, of all the ways, I’ve personally defaulted back to this one. It’s just so succinct and efficient. There is the possibility that I’ll miss a login, but this isn’t a security audit and really, I inventoried 100% of the logins I needed for my last migration.

Setup the SQL table

Setup the collector

Testing your results

If you’re testing the scripts on a non-busy system like I did, you may not get any results back because we’re ignoring connections from dbatools and SQL Server Management Studio.

If you’d like to ensure some results, just run this before performing a collection. This connects to SQL Server using a fake client name and performs a query that gathers database names.

Scheduling

To schedule the collection, you can use my favorite method, SQL Server Agent. I wrote about this in-depth in a post, Scheduling PowerShell Tasks with SQL Agent.

During my own migration, I used session enumeration and setup the collector to run every 5 minutes. With Traces or Extended Events, you can collect the logins far less frequently since they are stored on the remote server.

Hope this was helpful!

Chrissy

Great article Chrissy. I recently went through a similar exercise and found SQL Audit lacking as well. We settled on XE, same, little over the top but gets the work done efficiently. Didn’t know about Read-DbaXEFile, flashed out for more morning trading.

Cool, Hermann! Did you use which Event did you end up using? sql_statements_starting?

We have a lot of new (since Jan) XE stuff – check out dbatools.io/xevents

Pingback: Collecting Login Details With dbatools – Curated SQL

I am trying to run the collector session-insert.ps1 script for extended events and I am getting the following error. I updated dbatools to latest. I am on sql 2017 on a localhost using all the standard scripts you provided. The first command executes without error but the second command fails. Everything worked until than. Any ideas? Thanks

Write-DbaDataTable : Cannot validate argument on parameter ‘InputObject’. The argument is null. Provide a valid value for the argument, and then try running the command again.

At line:6 char:33

+ Write-DbaDataTable -InputObject $results -SqlInstance localhost -Data …

+ ~~~~~~~~

+ CategoryInfo : InvalidData: (:) [Write-DbaDataTable], ParameterBindingValidationException

+ FullyQualifiedErrorId : ParameterArgumentValidationError,Write-DbaDataTable

Hey Greg, that’s because your result set is null. In my own script I assign the collection to a variable then check to see if the variable is null: if ($results) { write-dbadatatable -inputobject $results).

I understand it is null, i guess my question is, using the script, why is it null? I can see data in the session in SSMS so there is data. It also appears you use the function ‘Read-DbaXEFile’ but this function is no longer in dbatools. (It is not listed in the command index) I am starting to think that is why the variable is null.

Thank you

The command index is out of date, the command does exist. You can see all our commands using: Find-DbaCommand.

Did you add any wheres and did you ensure the Login Tracker session is running?

#ensure you have servers assigned

$servers

#ensure your login tracker is running

$servers | Get-DbaXESession -Session ‘Login Tracker’

I got it working, here is what I found.

The $Servers is not working – I ran this before the script above and that is when it failed (not this ype, this created the null issue): $servers = Get-DbaRegisteredServer -SqlInstance localhost

So Something with that is not working. Still not sure.I ran the following and it returned nothing (but I am newish to powershell so this may not be right)

$servers = Get-DbaRegisteredServer -SqlInstance localhost

write-output $servers

I then ran the next command but i took out the ‘$servers |’ and ran without, it prompted me for the instance, i typed it in and everything worked. So I am not sure what the issue with registering the server is. It is a SQL Server 2017 if that makes any difference. Here is what worked.

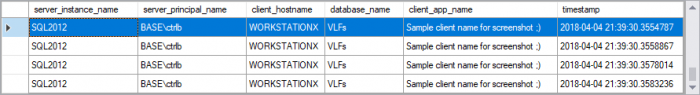

# Collect the results into a variable so that the bulk import is supafast

$results = Get-DbaXESession -Session ‘Login Tracker’ | Read-DbaXEFile |

Select-Object server_instance_name, server_principal_name, client_hostname, database_name, client_app_name, timestamp

# Bulk import to the centralized database in an efficient manner (piping would write line by line)

Write-DbaDataTable -InputObject $results -SqlInstance localhost -Database inventory -Table watchlogins

Thank you for your help

Hi Chrissy,

Great article

I wanted to use the Default Trace to get this information but something is bothering me. Default trace does not capture Login or Logout event id (14,15). This means that it does not garanty all connections will be captured. I tried connecting and running a simple query the close the connection. It was not in the trace. It’s worth warning the reader. They must be aware before taking migration decision based on incomplete data.

Could you, please, explain to me why you do not audit anything comeing from ssms?

not like ‘Microsoft SQL Server Management Studio%’

Thank you!

Luke

Hey Luke, the auditing is primarily intended to ensure that all supported applications are known and considered/planned for when performing the migration. When SSMS connects to a server, it’s more of an ad-hoc behavior that likely does not need need to be managed. If you would like to audit for purposes other than migrations or if your end-users are connecting via ssms, then you can remove the filter 👍

Hi I have a similar required to capture – username, login time, logout time. can you please share me scripts to do the same. thank you.