For the longest time, I managed these aliases using cliconfg for 64-bit applications (C:\Windows\System32\cliconfg.exe) or cliconfg for 32-bit applications (C:\Windows\SysWOW64\cliconfg.exe).

When I’d remember and if it was available, I’d also manage SQL Client Aliases using SQL Server Configuration Manager, which surfaces aliases for both 32-bit applications and 64-bit applications in a single pane. Here’s a screenshot of both SQL Server Configuration Manager and cliconfg. Note they both show the same aliases, confirming that you can manage aliases using whichever you prefer.

SQL Native Client provides cliconfg.exe but I think it’s also built into Windows. I’ve yet to find an OS that doesn’t have cliconfg on it – and I tried all the way back to Windows 2003! So if SQL Client Aliases seem useful to you, you’re in luck.

While you can manage SQL Client Aliases using the GUI, I prefer using dbatools, which saves me from logging into multiple servers and creates both the 32-bit and 64-bit aliases at once.

using dbatools

I find SQL Client Aliases most useful for facilitating easy migrations and using them is even recommended as a best practice in the SharePoint world.

First, I check all of my servers to see which aliases are currently set up.

Get-DbaClientAlias -ComputerName web01, web02, web03

Then, I’ll remove old ones using something like this, which allows me to select the aliases I want to remove.

Get-DbaClientAlias -ComputerName web01, web02, web03 | Out-GridView -Passthru | Remove-DbaClientAlias

Then I create my aliases as required. Note that the aliases and server names can be formatted in a number of ways. Both named instances and port numbers are supported.

New-DbaClientAlias -ComputerName web01, web02, web03 -ServerName sql02 -Alias sql01

New-DbaClientAlias -ComputerName web01, web02, web03 -ServerName sql02\sqlexpress19 -Alias sql01\sqlexpress14

New-DbaClientAlias -ComputerName web01, web02, web03 -ServerName 'sql02,2383' -Alias 'sql01,2383'

By default, connections are created as TCP/IP. Want to use named pipes? That’s also possible.

New-DbaClientAlias -ServerName sqlcluster\sharepoint -Alias sp -Protocol NamedPipes

If you’re familiar with named pipe aliases, you may remember that it creates a funky string. Don’t worry, we take care of that for you. Just pass the ServerName and Alias as you would with TCP/IP and we’ll build the named pipe string for you.
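For the curious, that funky string follows a long-standing convention: an alias is stored as a protocol prefix followed by the target. The sketch below shows how such a value could be composed. `ConvertTo-AliasValueSketch` is a hypothetical helper written for illustration, not the dbatools implementation, and the exact strings dbatools writes may differ.

```powershell
# Hypothetical helper: builds the value conventionally stored for a SQL client alias.
# Not dbatools code; it follows the DBMSSOCN (TCP/IP) and DBNMPNTW (named pipes) conventions.
function ConvertTo-AliasValueSketch {
    param(
        [Parameter(Mandatory)]
        [string]$ServerName,
        [ValidateSet('TCPIP', 'NamedPipes')]
        [string]$Protocol = 'TCPIP'
    )

    if ($Protocol -eq 'TCPIP') {
        # TCP/IP aliases keep the server name (and optional ",port") as typed
        return "DBMSSOCN,$ServerName"
    }

    # Split "server\instance" into its two parts; a default instance has no second part
    $server, $instance = $ServerName -split '\\', 2

    if ($instance) {
        # Named instances listen on a pipe that includes MSSQL$<instance>
        return 'DBNMPNTW,\\{0}\pipe\MSSQL${1}\sql\query' -f $server, $instance
    }
    return 'DBNMPNTW,\\{0}\pipe\sql\query' -f $server
}

ConvertTo-AliasValueSketch -ServerName 'sql02,2383'
ConvertTo-AliasValueSketch -ServerName 'sqlcluster\sharepoint' -Protocol NamedPipes
```

The point of the sketch is only that the pipe path is derived mechanically from the server and instance name, which is why you never need to type it yourself.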

Now that I’ve created all of my required aliases, let’s take a look at them using Get-DbaClientAlias and Out-GridView. Note that when I don’t specify -ComputerName, the command executes against my local machine, in this case, WORKSTATIONX.

try it out

Test this out yourself – create a new alias, then use dbatools, Azure Data Studio or SSMS to connect to the new server using the old name. In the example below, I’ve migrated sql2014 to sql2016 then will use the sql2014 alias to connect.

Automating your migrations just got even easier! For more information, check out our built-in help using Get-Help Get-DbaClientAlias -Detailed.

- Chrissy

The complete listing of all sessions can be found at sqlbits.com/information/publicsessions. Here are sessions that feature dbatools or PowerShell.

Can’t get to SQLBits? They publish the videos later on, so your vote still counts.

dbatools

These are the ones that I’m pretty sure focus on or use dbatools.

dbatools and Power BI walked into a bar by Cláudio Silva

dbatools’ recipes for Data Professionals by Cláudio Silva

Easy Database restores with dbatools by Stuart Moore

Extending the Data Platform with PowerShell, an introduction by Craig Porteous

Fundamental skills for the modern DBA by John McCormack

Install & Configure SQL Server with PowerShell DSC by Jess Pomfret

Life Hacks: dbatools edition by Jess Pomfret

Mask That Data! by Sander Stad

Monitoring SQL Server without breaking the bank by Gianluca Sartori

Start to See With tSQLt by Sander Stad

SQL Notebooks in Azure Data Studio for the DBA by Rob Sewell

SQL Server on Linux from the Command Line by Randolph West

The DBA Quit and Now You’re It: Action Plan by Matt Gordon

PowerShell

Not sure if these use dbatools but I know they use PowerShell.

6 Months to 5 Minutes, Faster Deploys for Happier Users by Jan Mulkens

Application and Database Migrations-A Pester Journey by Craig Ottley-Thistlethwaite

ARM Yourself: A beginners guide to using ARM Templates by Jason Horner

Data Protection by design: Creating a Data Masking strategy by Christopher Unwin

Fun with Azure Data Studio by Richard Munn

Introducing Azure Data Studio (SQL Operations Studio) by Rich Benner

Introduction to PowerShell DSC by John Q Martin

Introduction to Infrastructure as Code with Terraform by John Q Martin

Scripting yourself to DevOps heaven by David L. Bojsen

SSDT databases:Deployment in CI/CD process with Azure DevOps by Kamil Nowinski

Supercharge your Reporting Services: An essential toolkit by Craig Porteous

The quick journey from Power BI to automated tests by Bent Nissen Pedersen

Turn Runbooks into Notebooks: ADS for the On-Call DBA by Matt Gordon

The code

How did I get this list? With PowerShell of course!

# use basic parsing to make sure I write it to support Linux as well

$bits = Invoke-WebRequest -Uri https://sqlbits.com/information/publicsessions -UseBasicParsing

$links = $bits.Links | Where href -match event20

$sessions = $results = @()

# Make a cache to play around with, before final code, otherwise you just keep downloading all webpages

foreach ($link in $links) {

$url = $link.href.Replace('..','https://www.sqlbits.com')

$sessions += [pscustomobject]@{

Url = $url

Title = ($link.outerHTML -split '<.+?>' -match '\S')[0]

Page = (Invoke-WebRequest -Uri $url -UseBasicParsing)

}

}

foreach ($session in $sessions) {

$url = $session.Url

$title = $session.Title

# this is a case sensitive match

$speaker = ((($session.Page.Links | Where href -cmatch Speakers).outerHTML) -split '<.+?>' -match '\S')[0]

if ($session.Page.Content -match 'dbatools') {

$results += [pscustomobject]@{

Title = $title

Link = "[$title by $speaker]($url)"

Category = "dbatools"

}

}

if ($session.Page.Content -match 'powershell' -and $session.Page.Content -notmatch 'dbatools') {

$results += [pscustomobject]@{

Title = $title

Link = "[$title by $speaker]($url)"

Category = "PowerShell"

}

}

}

$results | Sort-Object Category, Title | Select-Object -ExpandProperty Link | clip
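The title and speaker extraction in that script leans on one compact idiom: split the HTML on tags, then filter out the empty pieces. Here is a small standalone sketch of it. The anchor tag is made up for illustration and is not copied from the SQLBits site.

```powershell
# A made-up anchor tag, similar in shape to what Invoke-WebRequest exposes as outerHTML
$outerHtml = '<a href="../event20/Sessions/Example"><span>Life Hacks: dbatools edition</span></a>'

# Splitting on tags leaves the text between them, plus some empty strings
$pieces = $outerHtml -split '<.+?>'

# -match against an array acts as a filter: keep pieces containing a non-whitespace character
$text = $pieces -match '\S'

# The first surviving piece is the link text
$title = $text[0]
$title
```

This is fine for a quick one-off scrape like this one, though it would likely be too fragile for HTML with nested markup inside the link.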

Haven’t been to SQLBits? It’s genuinely one of the awesomest conferences and I highly recommend it, both as a speaker and an attendee.

Chrissy

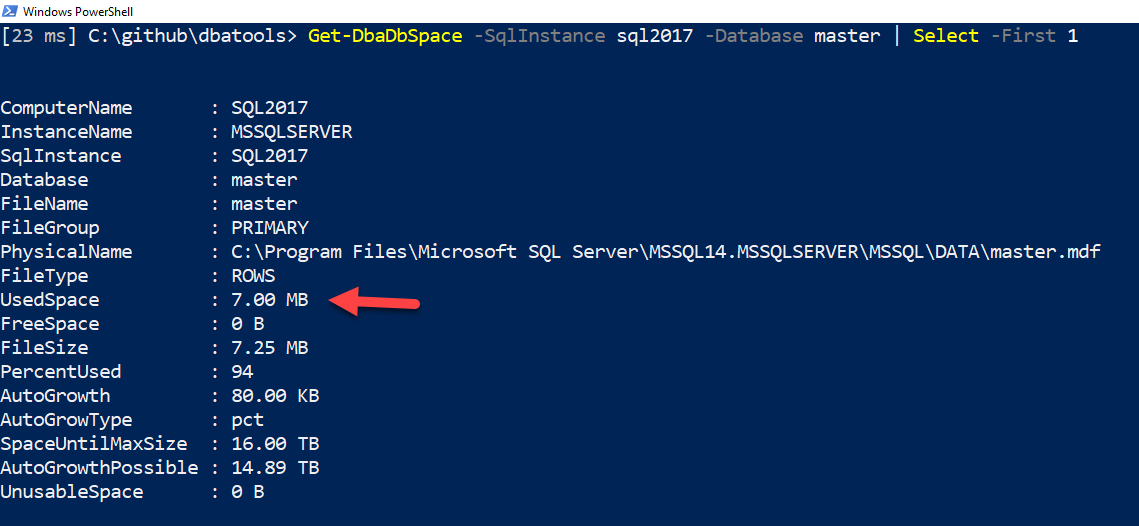

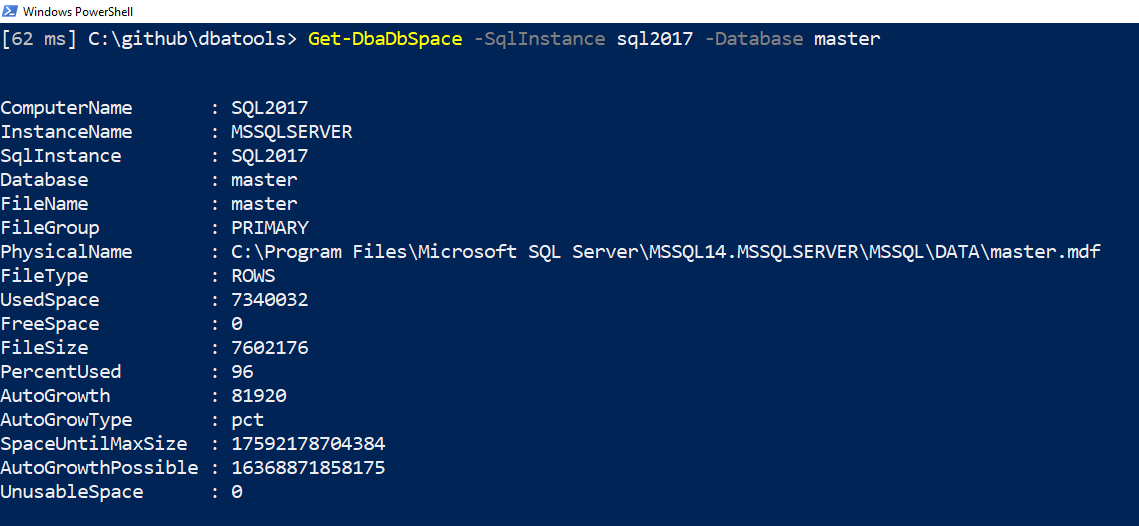

That was some C# based magic created by Microsoft PFE and creator of PSFramework, Fred Weinmann. In the background, SQL Server often gives us different types of numbers to represent file sizes. Sometimes it’s bytes, sometimes it’s megabytes. We wanted to standardize the sizing in dbatools, and thus the dbasize type was born.

Usage

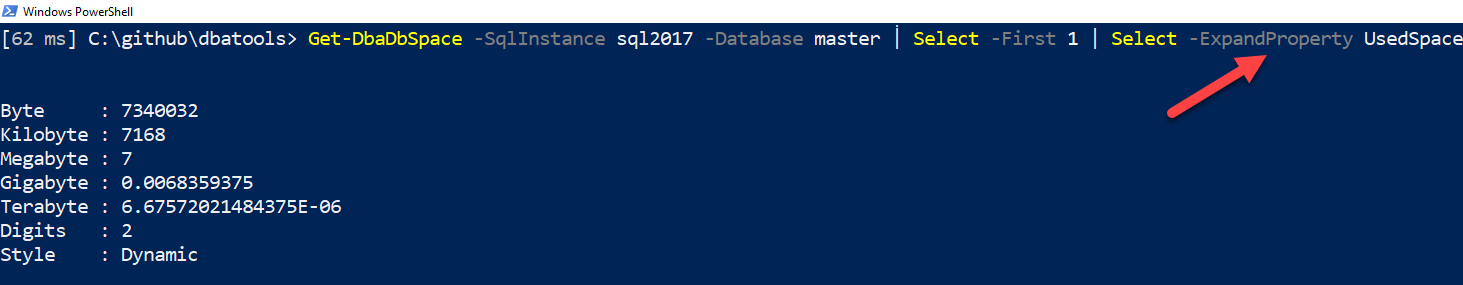

This size type is cool because it looks beautiful, showing KB, MB, GB, TB and PB. But it’s also packed with usable data behind-the-scenes. This can be seen when you expand the property, either by using .ColumnName or Select -ExpandProperty ColumnName.

This means that you don’t have to parse the results to get the bits and bytes – it’s all there in the background. Here’s the code used in the above screenshot:

# Evaluate UsedSpace details

Get-DbaDbSpace -SqlInstance sql2017 -Database master | Select -First 1 | Select -ExpandProperty UsedSpace

When using this type in practice, your code will likely look something like this:

# Use the type with Where-Object

Get-DbaDbSpace -SqlInstance sql2017 | Where-Object { $_.UsedSpace.Megabyte -gt 10 }

# Write it to file using foreach

Set-Content -Path C:\temp\mb.csv -Value 'Name,UsedMB'

foreach ($file in (Get-DbaDbSpace -SqlInstance sql2017 -Database master)) {

$name = $file.Database

$usedmb = $file.UsedSpace.Megabyte

Add-Content -Path C:\temp\mb.csv -Value "$name,$usedmb"

}

# Write it to CSV using calculated properties

Get-DbaDbSpace -SqlInstance sql2017 -Database master |

Select-Object -Property Database, @{ Name = 'UsedMB'; Expression = { $_.UsedSpace.Megabyte } } |

Export-Csv -Path C:\temp\mb.csv -NoTypeInformation

Configuration

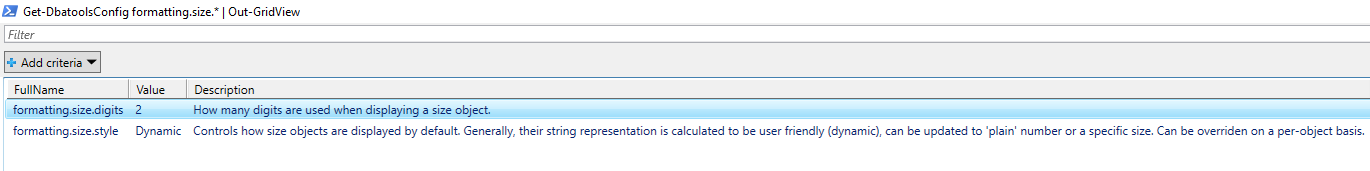

You can also configure the output. Want more than 2 digits after the decimal point? Can do! Don’t want the human-readable display by default? It can be disabled using Set-DbatoolsConfig.

# Get the two properties you'll be working with

Get-DbatoolsConfig formatting.size.* | Out-GridView

This ultimately shows details for formatting.size.digits and formatting.size.style.

formatting.size.digits

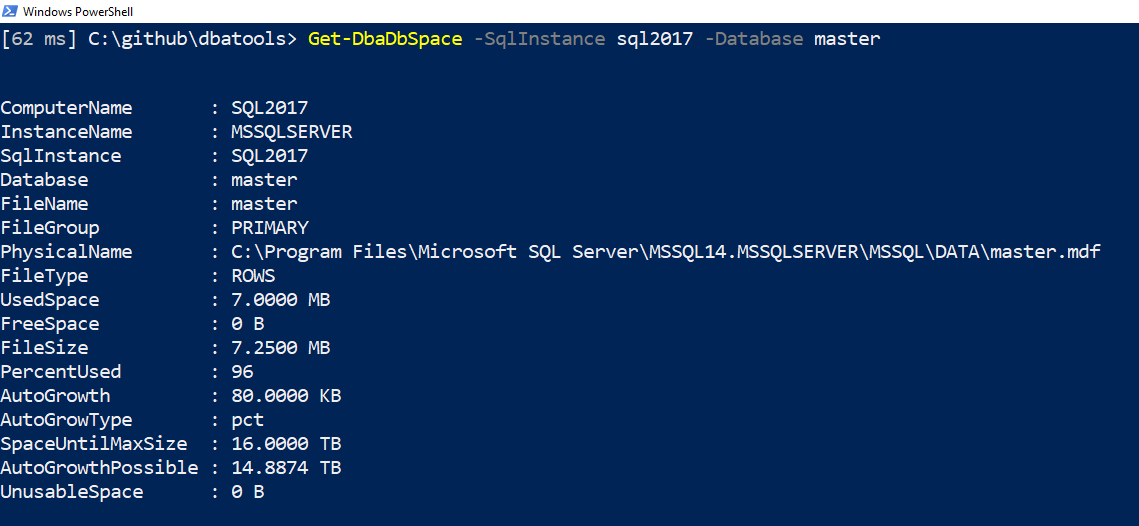

This setting controls how many digits are displayed after the decimal. By default, two digits are shown. Let’s change that to four.

# Change value to 4

Set-DbatoolsConfig -FullName formatting.size.digits -Value 4 | Register-DbatoolsConfig

Piping to Register-DbatoolsConfig persists the value across sessions. Otherwise, your digits would revert back to two when you create a new session.

Now you can see that there are 4 digits after the decimal! Cool. I didn’t even realize this before writing this blog post.

formatting.size.style

By default, we use the “Dynamic” size style. This basically means that we’ll show file sizes similar to the way Explorer does: the largest unit that fits is used. So if something is 2.5 megabytes, it won’t be shown in B or KB, but as 2.5 MB.
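To make the idea concrete, here is a minimal sketch of that kind of unit selection in plain PowerShell. `Format-SizeSketch` is a hypothetical function written for this post; the real dbasize type is implemented in C# inside dbatools and its output may differ in the details.

```powershell
# Hypothetical illustration of "Dynamic" sizing; not the dbatools implementation
function Format-SizeSketch {
    param(
        [Parameter(Mandatory)]
        [double]$Bytes,
        [int]$Digits = 2
    )

    $units = 'B', 'KB', 'MB', 'GB', 'TB', 'PB'
    $value = $Bytes
    $index = 0

    # Move up one unit at a time while the number is still 1024 or more
    while ($value -ge 1024 -and $index -lt ($units.Count - 1)) {
        $value = $value / 1024
        $index++
    }

    # Invariant culture keeps the decimal separator a period regardless of regional settings
    $number = $value.ToString("F$Digits", [cultureinfo]::InvariantCulture)
    "$number $($units[$index])"
}

Format-SizeSketch -Bytes (2.5 * 1MB)
Format-SizeSketch -Bytes 1024 -Digits 4
```

The `-Digits` parameter plays the role that formatting.size.digits plays in dbatools: it only changes how the number is displayed, not the value behind it.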

Now let’s disable styling altogether and show values in bytes, but only for the current session.

# Removing formatting, show in bytes

Set-DbatoolsConfig -FullName formatting.size.style -Value plain

Note in the screenshot above that UsedSpace is now an unformatted number.

Prefer that everything be displayed in terabytes by default? We support that too.

# Set default value to terabyte

Set-DbatoolsConfig -FullName formatting.size.style -Value Tb

Here are all the options available:

- Dynamic

- Plain

- Byte

- B

- Kilobyte

- KB

- Megabyte

- MB

- Gigabyte

- GB

- Terabyte

- TB

If you’re wondering how I got that, I researched how to show an enum in PowerShell, found this TechNet article then executed:

# Use .NET to enumerate the available values of SizeStyle

[System.Enum]::GetNames([Sqlcollaborative.Dbatools.Utility.SizeStyle])

Hope that helps with number formatting in dbatools! And thanks to Fred for such a beautiful, standardized way to show numbers.

- Chrissy

For years, people have asked if any dbatools books are available and the answer now can finally be yes, mostly. Learn dbatools in a Month of Lunches, written by me and Rob Sewell (the DBA with the beard), is now available for purchase, even as we’re still writing it. And as of today, you can even use the code bldbatools50 to get a whopping 50% off (valid forever).

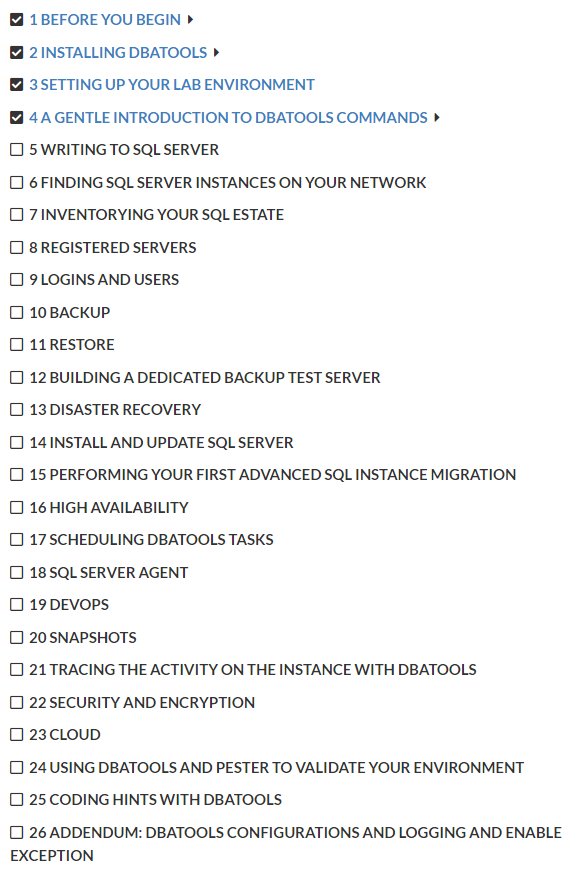

Right now, the first few chapters are available, and we’re going to release a new chapter at least once a month. Here’s our current Table of Contents.

What is MEAP?

Manning’s Early Access Program was introduced in 2003, because the publisher “found it frustrating that while authors were working on later chapters, the earlier ones sat gathering dust, of no use to anyone.”

According to the website, MEAP offers several benefits over the traditional “wait to read” model.

- Get started now. You can read early versions of the chapters before the book is finished.

- Regular updates. We’ll let you know when updates are available.

- Get finished books faster. MEAP customers are the first to get final eBooks and pbooks.

What I appreciate as an author is that this allows early-adopters to become part of the writing process and can help make the book even better. As Manning puts it:

The content you get is not finished and will evolve, sometimes dramatically, before it is good enough for us to publish. But you get the chapter drafts when you need the information. And, you get a chance to let the author know what you think, which can really help both them and us make a better book.

Occasionally, Manning will decide not to publish a book that’s become available in MEAP. Fear not! If they cancel your MEAP, you can exchange it for another book or get a refund.

Thanks

For those 300+ of you who have already purchased our book, Rob and I very much appreciate your support and hope that you find the book super useful.

Also, shoutout to Mike Shepard (b | t) and Cláudio Silva (b | t) for the technical edits and to the anonymous community editors who took the time to review our book before it went to MEAP!

- Chrissy

Setup

Imagine this scenario:

Source (APPSQL1)

- Dedicated SQL Server 2008 R2 failover clustered instance with a non-default collation (SQL_Latin1_General_CP1_CI_AI)

- Custom .NET application with an intense database containing a non-default collation (also SQL_Latin1_General_CP1_CI_AI), multiple CLR assemblies, and thousands of tables, views, stored procedures, functions

- Nearly 30 SQL Agent jobs, many of which had to be disabled over the years due to compatibility issues and scope changes

- Out-of-support for both Microsoft and the application vendor

Destination (APPSQL2)

- Shared server

- SQL Server 2017

- Default collation (SQL_Latin1_General_CP1_CI_AS)

There was even a linked server in the mix, but our biggest concerns revolved around the changing collation and the Agent jobs, which were known to be brittle.

The destination test server was an existing shared server, which mirrored the scenario that would play out in production. And while the databases only needed to exist on the new server for a limited period of time, these migrated databases were going to be the most important databases on the entire instance. This meant that the SQL Server configs were going to have to cater to this app’s needs. One exception was the collation, as the accent sensitivity was determined not to be a big deal and the vendor agreed.

Interesting requirements, no doubt!

Prep

Fortunately, my colleague kept a login inventory, and we used this to determine that there would be 5 servers with connection strings that’d have to be updated with the new server name. Generally, I try to see if creating SQL client aliases is a suitable solution and this was no exception. Creating SQL aliases satisfies my curiosity and can let us know early on if the migration was a success.

This blog post refers to options added in 1.0.34. If you’d like to follow along, please ensure you’ve updated to the latest version of dbatools.

So let’s take a look at the prep work.

# Ensure PowerShell remoting is available (Test-DbaConnection would also work)

Invoke-Command -ComputerName server1, server2, server3, server4, server5 -ScriptBlock { $env:computername }

# See current aliases to ensure no overlap

Get-DbaClientAlias -ComputerName server1, server2, server3, server4, server5

# Export / Document all instance objects from source SQL Server

Export-DbaInstance -SqlInstance APPSQL1, APPSQL2

# Ensure no jobs are currently running

Get-DbaRunningJob -SqlInstance APPSQL1

# Perform final log backup

Start-DbaAgentJob -SqlInstance APPSQL1 -Job "DatabaseBackup - USER_DATABASES - LOG"

Since the old server was going to be turned off entirely, I thought it’d be a good idea to export all of the objects (logins, jobs, etc) so that the DBA could see the state they were in at the time of migration. While we were at it, we threw in the destination server as well.

Once the final log backup was made, it was time to perform the migration!

Migration

Because the destination server was a shared server, it wasn’t a likely candidate for Start-DbaMigration as this command is intended for instance-to-instance migrations. In general, when I migrate to shared servers, I’ll run each individual Copy-Dba command for things like databases, logins and jobs.

In this case, however, I recommended Start-DbaMigration since the application was high-priority and I wanted the migration to be as thorough as possible. I knew that the SQL Server configuration values would change, but because they were exported when we ran Export-DbaInstance, we could easily reference old settings.

I did decide to exclude a few things that could needlessly pollute the new server like user objects in the system databases (Copy-DbaSysDbUserObject) and I also excluded the Agent Server Properties, because the destination server properties (like fail-safe operator) were already set.

# Start Migration - view results in a pretty grid, and assign the results to a variable so that it can be easily referenced later

Start-DbaMigration -Source APPSQL1 -Destination APPSQL2 -UseLastBackup -SetSourceReadOnly -Exclude SysDbUserObjects, AgentServerProperties, PolicyManagement, ResourceGovernor, DataCollector | Select-Object * -OutVariable migration | Out-GridView

# Create new alias

New-DbaClientAlias -ComputerName server1, server2, server3, server4, server5 -ServerName APPSQL2 -Alias APPSQL1

# Prep post migration potential failure/fallback

# Set-DbaDbState -SqlInstance APPSQL1 -AllDatabases -ReadWrite -Force

# Get-DbaClientAlias -ComputerName server1, server2, server3, server4, server5 | Where AliasName -eq APPSQL1 | Remove-DbaClientAlias

Post-migration

So the migration went decently well. While we didn’t have to use the fallback commands, we did have to investigate a couple failures.

Failed job migration

A couple jobs didn’t migrate because they were rejected by the new server. It seems the scripted export code contained “@server=” references, which were invalid because they named the old server.

# Find jobs causing issues

Get-DbaAgentJobStep -SqlInstance APPSQL1 | Where Command -match "@server=N'APPSQL1'"

# Nope that didn't work. Oh, wait, I need the export code, not the command code.

$jobs = $migration | Where-Object { $psitem.Type -eq "Agent Job" -and $psitem.Status -eq "Failed" } | Select -ExpandProperty Name

Get-DbaAgentJob -SqlInstance APPSQL1 -Job $jobs | Export-DbaScript -Passthru -Outvariable tsql | clip

The above code resulted in the T-SQL CREATE scripts being added to the clipboard. We then pasted that code into SSMS, performed a find/replace of @server=N'APPSQL1' with @server=N'APPSQL2', then executed the T-SQL. Boom, it worked. Jobs were created and scheduled. I also updated dbatools to avoid this failure in the future.

While I used SSMS to perform the find/replace, this could have been done in PowerShell as well.

$tsql = $tsql -Replace "@server=N'APPSQL1'","@server=N'APPSQL2'"

Invoke-DbaQuery -SqlInstance APPSQL2 -Query $tsql

Now we needed to run each of the newly created jobs to see if they worked, as it makes me feel more confident in the migration’s success. After evaluating each job’s purpose, we determined it would be okay to run every job outside of its scheduled execution time.

# Start newly created jobs that are enabled (the lazy way), wait for them to finish

Get-DbaAgentJob -SqlInstance APPSQL2 | Where CreateDate -gt (Get-Date).AddMinutes(-60) | Where Enabled | Start-DbaAgentJob -Wait

# Ugh, looks like we have some failed executions. Were any of these jobs failing prior to migration?

Get-DbaAgentJob -SqlInstance APPSQL1 | Where LastRunOutcome -ne "Succeeded" | Where Enabled | select Name

Nooo, all the failed jobs ran successfully on APPSQL1! Now we’d have to dig in to see what code was failing and how it could be fixed. I imagined the failures were due to collation issues and I was right. Good ol’ Cannot resolve the collation conflict between….

Maybe we can update the collation in the databases? I recall years ago someone asked for this functionality in dbatools but thoroughly changing the collation of an existing database is so complicated as it requires changing the collation of so many objects including tables and indexes.

Let’s try changing the database collation anyway, just to see if it’s possible and if it helps. This was mostly to satisfy my curiosity.

# first we have to kill the connections then attempt a collation change in SSMS

Get-DbaProcess -SqlInstance APPSQL1 -Database appdb | Stop-DbaProcess

Nope. Our attempted collation change using SSMS didn’t even work because there were some dependent features in a couple of the databases. So now we had to find and edit the impacted views.

# Omg most of the database objects are encrypted 🙄, don't we have a command for that?

Get-Command -Module dbatools *crypt*

# Yesss, Invoke-DbaDbDecryptObject. Thanks Sander!

Get-Help -Examples Invoke-DbaDbDecryptObject

# Run it

Invoke-DbaDbDecryptObject -SqlInstance APPSQL2 -Database appdb -Name vwImportantView

# Oh darn, DAC is not enabled and Invoke-DbaDbDecryptObject needs DAC

Set-DbaSpConfigure -SqlInstance APPSQL2 -ConfigName RemoteDacConnectionsEnabled -Value 1

# Run again

Invoke-DbaDbDecryptObject -SqlInstance APPSQL2 -Database appdb -Name vwImportantView

# Turn DAC back off

Set-DbaSpConfigure -SqlInstance APPSQL2 -ConfigName RemoteDacConnectionsEnabled -Value 0

We decrypted the views then added COLLATE DATABASE_DEFAULT to some queries, altered the views and voila! We were set. After the jobs ran successfully, we handed the migration off to the application team.

SSPI failures

The application team immediately handed it right back to us. Seems they encountered some “Cannot Generate SSPI Context” failures. Wait, what? The SPNs are set, right?

# Double-checking all of the SPNs are set

Test-DbaSpn -ComputerName APPSQL2

# Looks great! So is it just the app or can we make any kerberos connections using PowerShell?

# Maybe this is because they are using a SQL Client alias?

Test-DbaConnectionAuthScheme -SqlInstance APPSQL2

Oh, no: our dbatools connection was also using NTLM. So we checked to see if there were some stale DNS records. Nope. Cleaned out the Kerberos tickets. Works? Still no. Triple checked with Microsoft’s tools and really, Kerberos should be working.

Turns out it was an issue with a setting in Windows (didn’t record which, oops), and after that was adjusted, Kerberos worked!

Now for the application

After confirming that Kerberos was totally working, I removed the newly created SQL Client aliases and they updated all of the connection strings. Still there were problems. The error message was written in en-gb (“initalised”) so I figured it was an application error. If this was an error coming from SQL Server or IIS, it would have been written in en-us.

We figured that if the error is referencing database initialization, there must be some config table that has the name of the server. What a nightmare. Now we have to search through the databases, guessing at the potential name for this configuration object.

# I bet it's one of those configuration tables.

Get-DbaDbTable -SqlInstance APPSQL2 -Database appdb | Where Name -match config

# No good candidates. Let's look thru the stored procedures.

Find-DbaStoredProcedure -SqlInstance APPSQL2 -Database appdb -Pattern config

# Oops, forgot they were all encrypted. That didn't return anything.

# Search views, stored procedures and triggers then save the candidates' names to a variable so they can be passed to Invoke-DbaDbDecryptObject

Get-DbaDbModule -SqlInstance APPSQL2 -Database appdb -Type View, StoredProcedure, Trigger | Out-GridView -Passthru | Select-Object -ExpandProperty Name -OutVariable names

Invoke-DbaDbDecryptObject -SqlInstance APPSQL2 -Database appdb -Name $names

Oh, la la! We found a candidate, updated the values and we were back in action! The migration was successful and the test results were accepted. With this knowledge, they were able to quickly perform the production migration.

Out of curiosity

What was your most challenging migration with dbatools like? How often do you have to modify jobs and database objects like stored procedures/views?

- Chrissy

Since dbatools is command-line based, you can view/modify your current options using our various config commands.

- Get-DbatoolsConfig

- Get-DbatoolsConfigValue

- Set-DbatoolsConfig

- Reset-DbatoolsConfig

- Import-DbatoolsConfig

- Export-DbatoolsConfig

- Register-DbatoolsConfig

- Unregister-DbatoolsConfig

We offer the ability to configure various options such as connection timeouts, datetime formatting and default export paths. All-in-all, we offer nearly 100 configuration options to help customize and enhance your dbatools experience.

Exploring our options

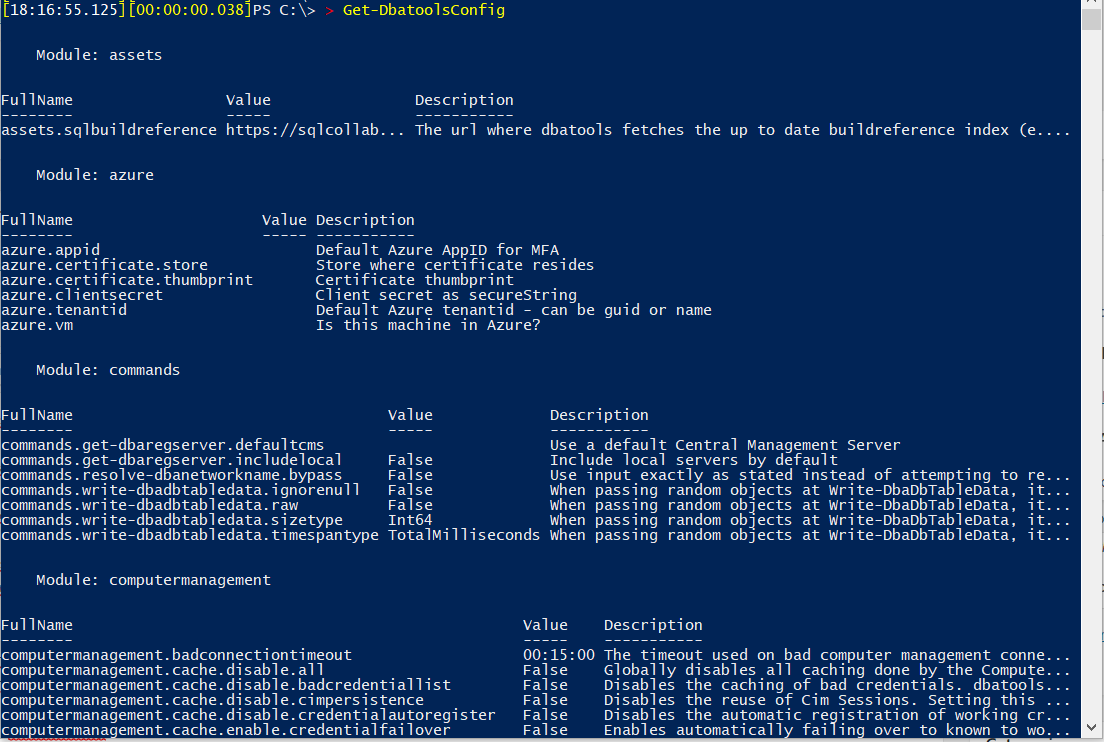

To see a list of all of the options offered, open a PowerShell console and type Get-DbatoolsConfig.

In the image above, you can find some of the configuration options that you can set and that are widely used by dbatools.

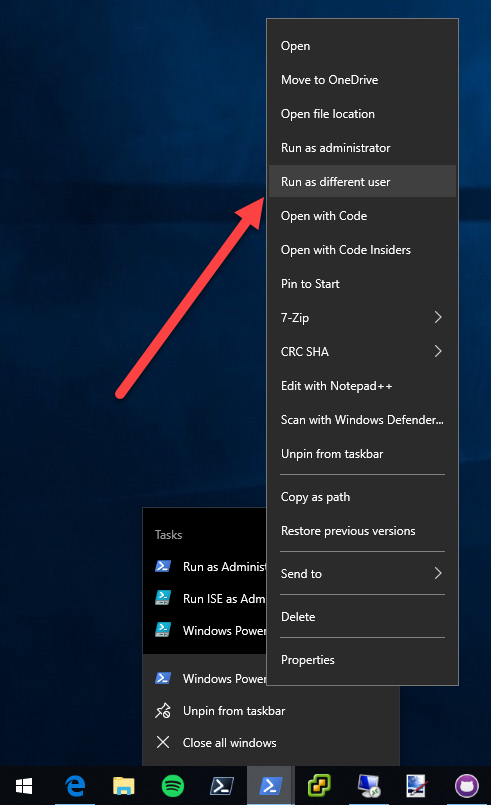

These settings are scoped at the session-level. This means that if you use the dbatools module within SQL Agent jobs that run under a different account, you will need to set the options for the user/credential running your SQL Agent jobs. You can do this by logging into the server as that user and editing the options. You can also run PowerShell as that user: hold Shift and right-click the PowerShell logo in the taskbar, and you will be offered the option to run as a different user.

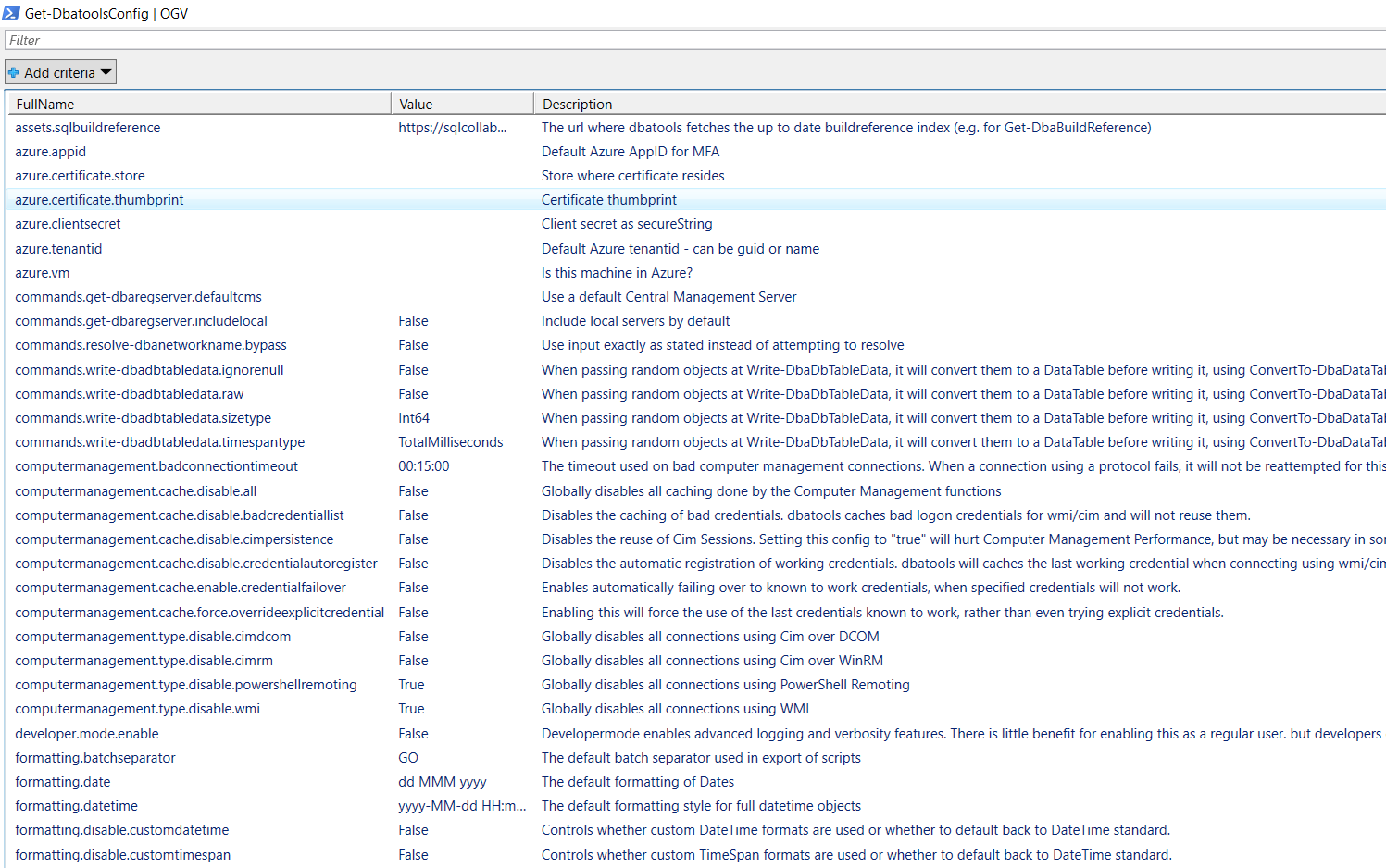

Because there are nearly 100 configuration options, you can take advantage of Out-GridView to easily view and sort them.

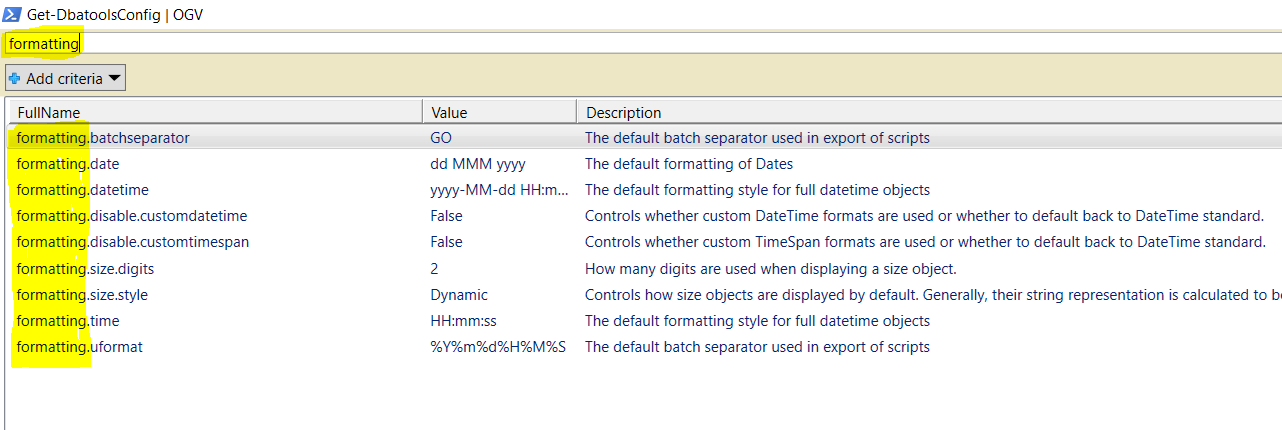

You can then filter the results more easily.

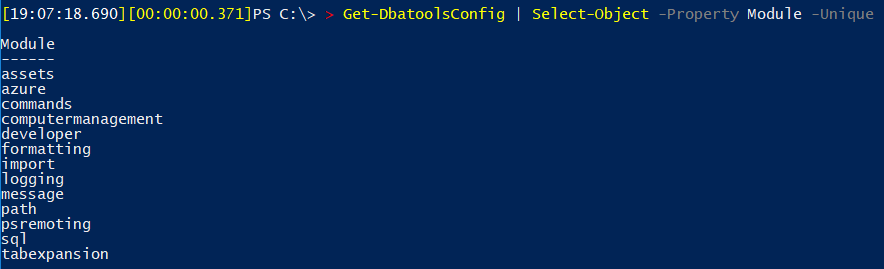

“I saw that settings are grouped by modules. Which modules are available?”

If you run the following command, you will find all the distinct modules we have:

Get-DbatoolsConfig | Select-Object -Property Module -Unique

As you can see, there are a good number of different modules.

Get-DbatoolsConfig vs. Get-DbatoolsConfigValue

You may have noticed two similarly named commands: Get-DbatoolsConfig and Get-DbatoolsConfigValue. Let’s take a look at the differences.

Get-DbatoolsConfig – a rich object

This command returns not only the value for a setting but also the module where it belongs and a useful description about it.

You can specify the -FullName (preferable because it is unique) or only the -Name (same as -FullName but without the prefix which is the module name).

-FullName example:

Get-DbatoolsConfig -FullName sql.connection.timeout

-Name example:

Get-DbatoolsConfig -Name connection.timeout

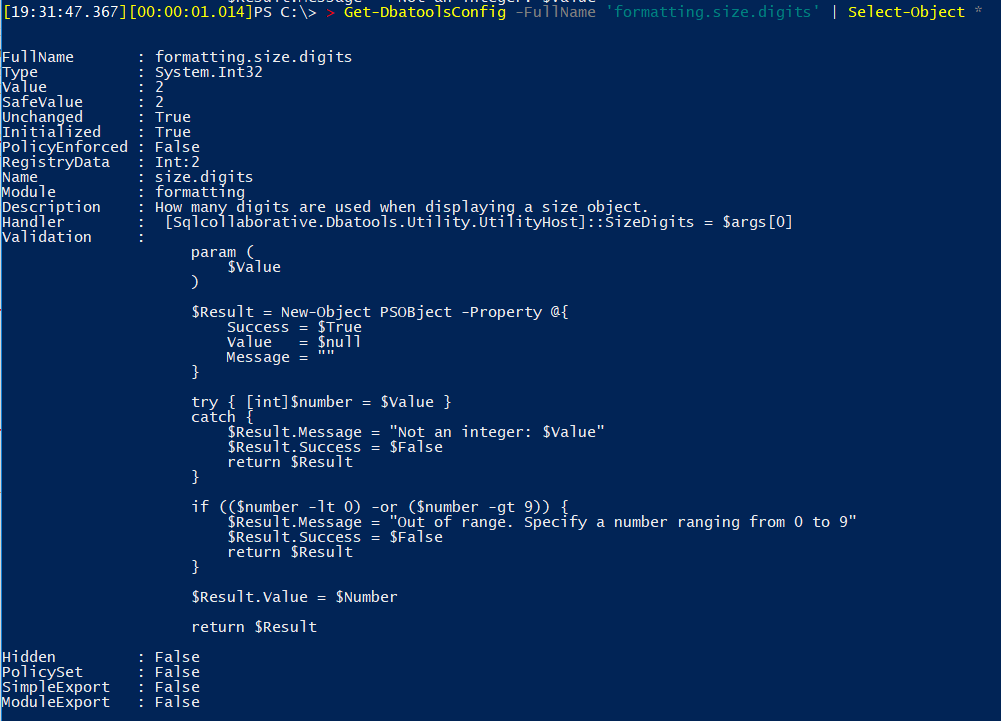
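The relationship between the two parameters is easy to see by splitting a full name at its first dot. This is plain string handling for illustration, not a dbatools call.

```powershell
# Split a full name into its module prefix and the remaining name
$fullName = 'sql.connection.timeout'
$module, $name = $fullName -split '\.', 2

$module   # sql
$name     # connection.timeout
```

Because only the combination of module and name is unique, -FullName is the safer choice in scripts.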

We also attach a validation script to each configuration. If you want to know how a value is validated, look at the Validation property. For example, if you run:

Get-DbatoolsConfig -FullName formatting.size.digits | Select-Object *

You will get:

Get-DbatoolsConfigValue – Is what you are thinking but we have interesting options

If you just want to get the configured value as a string, you should use the Get-DbatoolsConfigValue command. This command is widely used internally within the dbatools module.

You may find that we also use -Fallback. As stated in the documentation: “A fallback value to use, if no value was registered to a specific configuration element. This basically is a default value that only applies on a ‘per call’ basis, rather than a system-wide default.”

Also, if we don’t want to get a null result, we can specify the -NotNull parameter: “…the function will throw an error if no value was found at all.”
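The behaviour of those two parameters can be pictured with a small stand-in. `Get-ConfigValueSketch` and the `$store` hashtable are hypothetical and exist only to illustrate the semantics described in the help text; they are not how dbatools stores or retrieves its settings.

```powershell
# Hypothetical stand-in for the configuration store; not how dbatools keeps its settings
$store = @{ 'formatting.size.digits' = 2 }

function Get-ConfigValueSketch {
    param(
        [Parameter(Mandatory)]
        [string]$FullName,
        $Fallback,
        [switch]$NotNull
    )

    $value = $store[$FullName]

    # Use the per-call fallback only when nothing was registered
    if ($null -eq $value) { $value = $Fallback }

    # With -NotNull, a missing value becomes an error instead of a silent $null
    if ($NotNull -and $null -eq $value) {
        throw "No value found for '$FullName'"
    }
    $value
}

Get-ConfigValueSketch -FullName 'formatting.size.digits'
Get-ConfigValueSketch -FullName 'does.not.exist' -Fallback 10
```

Adding -NotNull to the second call without a fallback would raise an error, which is handy when a script cannot sensibly continue without the setting.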

Set a new configuration value

To update a value you need to use the Set-DbatoolsConfig command. Unfortunately, you will not find documentation for this command on our docs page. This is a known issue and it happens because that command is a cmdlet so the help is in the dbatools library itself.

For this particular case, you can and should rely on the Get-Help command.

Get-Help -Name Set-DbatoolsConfig -Full

The easiest way to update a value is to provide the -FullName and -Value parameters. Example:

Set-DbatoolsConfig -FullName formatting.size.digits -Value 3

Note that changes made by Set-DbatoolsConfig only persist for the current session. To permanently persist your changes, use Register-DbatoolsConfig. This command will be covered next.

Let’s see other examples

Want to clean the logs more frequently? Or keep them longer than the default of 7 days? Let’s change to 8 days by setting the Logging.MaxLogFileAge configuration.

Set-DbatoolsConfig -FullName Logging.MaxLogFileAge -Value (New-TimeSpan -Days 8)

Want to format dates and times differently?

Take a look at the formatting section and find a couple of configurations to set the format as you would like.

If you want to change from dd MMM yyyy format to yyyy MM dd you can run the following command:

Set-DbatoolsConfig -FullName formatting.date -Value 'yyyy MM dd'
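If you’d like to preview a format string before setting it, .NET’s standard date formatting shows the result. This sketch uses a fixed date and the invariant culture so the output is predictable; it does not call dbatools.

```powershell
# A fixed date so the output is predictable
$date = [datetime]::new(2019, 7, 4)
$culture = [cultureinfo]::InvariantCulture

$date.ToString('dd MMM yyyy', $culture)   # 04 Jul 2019
$date.ToString('yyyy MM dd', $culture)    # 2019 07 04
```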

What about sqlconnection timeout?

15 seconds (the default) is not enough? Change it using the sql.connection.timeout configuration.

Set-DbatoolsConfig -FullName sql.connection.timeout -Value 30

Do you use dbatools with Azure?

There are a couple of configs that you can set in the Azure section. Take a look at them:

Get-DbatoolsConfig -Module azure

Note that only a few of our commands have been tested to work with Azure. Better support for Azure is a long-term goal.

Permanently persist changes

As mentioned previously, when you use the Set command, it is set only for your current session. To make the change permanent, use Register-DbatoolsConfig.

Get-DbatoolsConfig | Register-DbatoolsConfig

This will write all configuration values, by default, in the registry.

Like all of our config commands, this works on Windows, Linux and macOS.

Reset configured value to its default

To reset a single configured value to its dbatools default, run the following:

Reset-DbatoolsConfig -FullName sql.connection.timeout

This will set the configuration value back to 15. To reset all of your dbatools options to default, run the following:

Get-DbatoolsConfig | Reset-DbatoolsConfig

What’s new?

As of dbatools version 1.0.32, new PowerShell Remoting configurations are available. You can read more about them in my recent blog post, More PowerShell Remoting coverage in dbatools.

Just remember…

Next time you catch yourself thinking about changing some default behaviour, remember to take a look at these configurations. If something is not available for your use case, talk with us on Slack or just open a feature request explaining your needs and we will try to guide/help you.

Thanks for reading!

Cláudio

This month’s T-SQL Tuesday, hosted by Tracy Boggiano ([b]|[t]), is all about Linux.

dbatools and linux

As a long-time Linux user and open-source advocate, I was beyond excited when PowerShell and SQL Server came to Linux.

A few of the decisions I made about dbatools were actually inspired by Linux. For instance, when dbatools was initially released, it was GNU GPL licensed, which is the same license as the Linux kernel (we’ve since re-licensed under the more permissive MIT). In addition, dbatools’ all-lower-case naming convention was also inspired by Linux, as most commands executed within Linux are in lower-case and a number of projects use the lower-case naming convention as well.

dbatools on linux

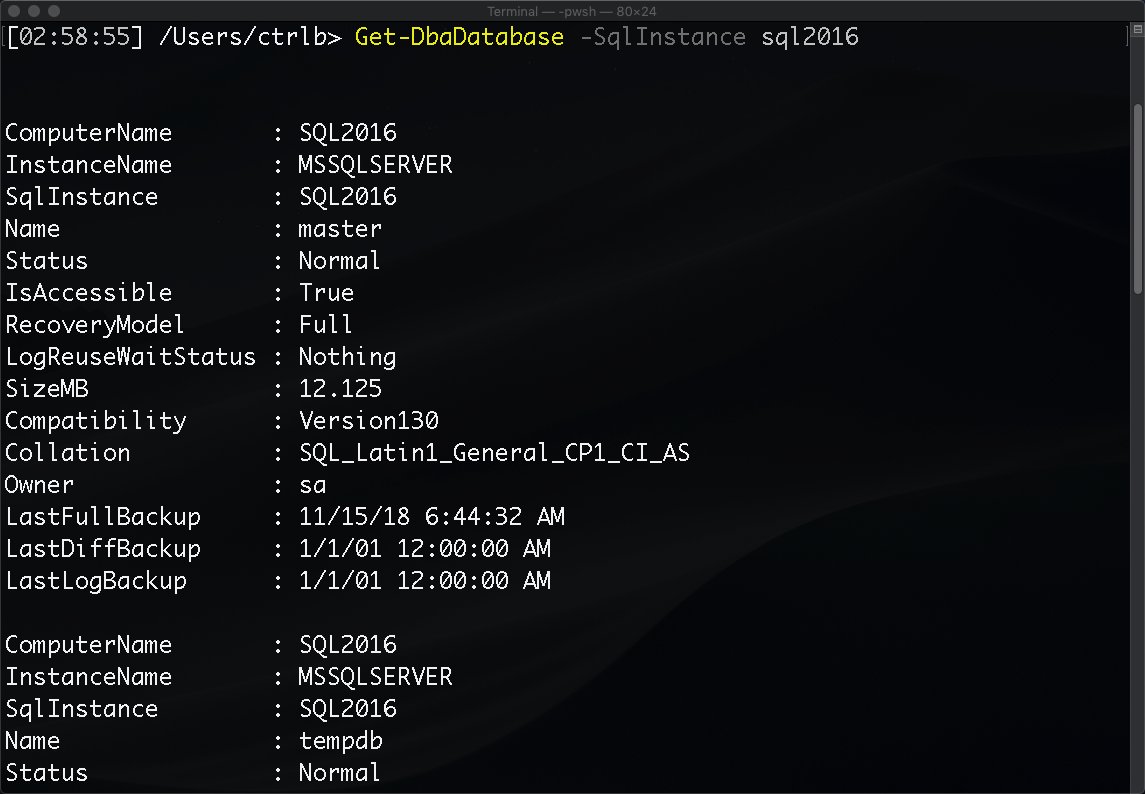

Thanks to Microsoft’s PowerShell and SQL Server teams, dbatools runs on Linux and mac OS!

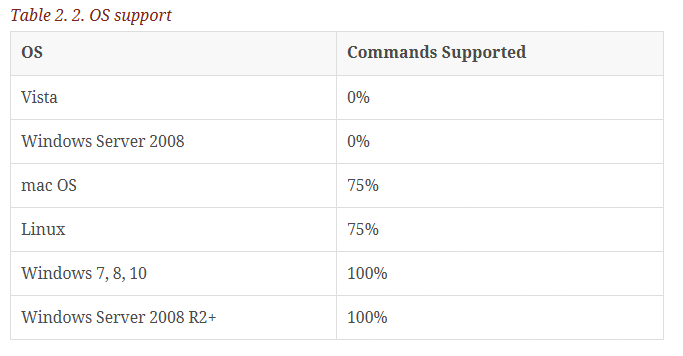

As covered in our book which’ll be released next year, dbatools in a Month of Lunches, 75% of commands in dbatools are supported by Linux and mac OS.

Commands that are pure-SQL Server, like Get-DbaDatabase or New-DbaLogin, work on Linux and mac OS. Others that rely on WMI or remoting, like Get-DbaDiskSpace or Enable-DbaAgHadr, do not currently work on Linux.

If you’re curious, our docs site will tell you if a command is supported by Linux and mac OS.

And, of course, you can also ask on Linux itself. After installing dbatools, run Get-Command -Module dbatools to see all of the commands that are available.
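
A small sketch of how that check might look in practice. The `*Login*` filter is just an example of narrowing the list.

```powershell
# Count the dbatools commands available in this session
(Get-Command -Module dbatools).Count

# Narrow the list down, for example to everything that deals with logins
Get-Command -Module dbatools -Name *Login*
```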

sql on linux

dbatools also supports SQL on Linux. If it works in SQL Server Management Studio, it’ll work with dbatools, as we’re built on the same libraries and SQL on Linux is pretty much the same as SQL on Windows.

Interested in learning more? Check out the #1 rated SQL Server on Linux book, Pro SQL Server on Linux by Microsoft’s Bob Ward. This book was actually edited by our friend Anthony Nocentino, and it’s rumored that Anthony will contribute some sweet Linux-centric commands to dbatools when the Year of the Linux Desktop arrives, so we’re looking forward to that.

Kidding aside, dbatools supports Linux and mac OS in a number of ways. Not only can you run dbatools FROM Linux, you can also connect TO SQL on Linux. We even have fantastic Registered Server support that eases authentication.

to linux / mac os

Connecting to SQL Server (Windows or Linux) from Linux or mac OS is generally done using an alternative -SqlCredential. So if you follow Microsoft’s guide to setting up SQL on Linux, or if you use dbatools’ Docker guide, you’ll likely need to authenticate with the sa or sqladmin account. Execute the following command.

Get-DbaDatabase -SqlInstance sqlonlinux -SqlCredential sa

You’ll then be prompted for your password, and voilà! Don’t want to type your password every time? You can reuse it by assigning the credential to a variable.

$cred = Get-Credential sa

Get-DbaDatabase -SqlInstance sqlonlinux -SqlCredential $cred

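You can also export your credentials to disk using Export-CliXml. On Windows, the password is encrypted so that only the same user on the same computer can decrypt it. On Linux and mac OS, Export-CliXml does not encrypt the password, so restrict access to the file.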

Get-Credential sa | Export-CliXml -Path C:\temp\creds.xml

$cred = Import-CliXml -Path C:\temp\creds.xml

Get-DbaDatabase -SqlInstance sqlonlinux -SqlCredential $cred
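
The C:\temp path above only makes sense on Windows. When running from Linux or mac OS, something along these lines should work instead. This is a sketch, and the file name is a hypothetical choice.

```powershell
# Build a path under the current user's home directory, which exists on Windows, Linux and mac OS
$credPath = Join-Path -Path $HOME -ChildPath 'sqlonlinux-cred.xml'

# Prompt once and save the credential
Get-Credential sa | Export-Clixml -Path $credPath

# Later sessions can load it again without prompting
$cred = Import-Clixml -Path $credPath
Get-DbaDatabase -SqlInstance sqlonlinux -SqlCredential $cred
```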

Prefer using the Windows Credential Store instead? Check out the PowerShell module, BetterCredentials.

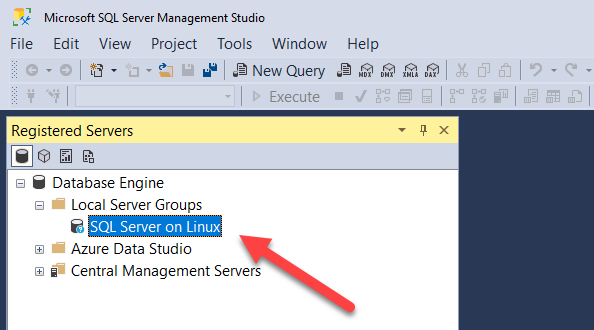

to linux / mac os using registered servers

You can also use Local Registered Servers! This functionality was added in dbatools 1.0.

Connect-DbaInstance -SqlInstance sqlonlinux -SqlCredential sa | Add-DbaRegServer -Name "SQL Server on Linux"

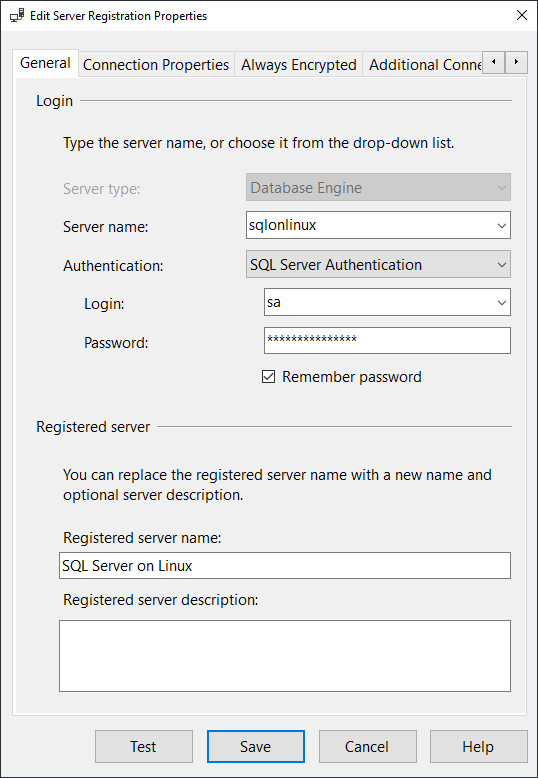

From there, you can see it in SQL Server Management Studio’s Local Server Groups.

And the detailed pane

Ohhh! What! Now you can do this without worrying about authentication:

Get-DbaRegServer -ServerName sqlonlinux | Get-DbaDatabase

from dbatools on mac os to sql server on windows

Something I had a lot of fun doing was joining my Mac Mini to my homelab, then authenticating flawlessly with my domain-joined, Windows-based SQL Server. I didn’t even need -SqlCredential because Integrated Authentication worked.

If you cannot join your Linux or mac OS workstation to a Windows domain, you can still use -SqlCredential. This parameter not only supports SQL Logins, but Windows logins as well. So you could connect to a Windows-based SQL Server using an Active Directory account such as ad\sqladmin.
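
As a rough sketch of that last case, assuming a Windows-based instance named sql01 (a hypothetical name) and the ad\sqladmin account mentioned above:

```powershell
# Prompt for the password of the Active Directory account
$cred = Get-Credential ad\sqladmin

# sql01 is a hypothetical Windows-based SQL Server
Get-DbaDatabase -SqlInstance sql01 -SqlCredential $cred
```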

Got any questions? Hit up #dbatools on the SQL Server Community Slack at aka.ms/sqlslack and one of our friendly community members will be there to assist.

- Chrissy

dbatools is smart. It can do things in the background when you’re using the commands, like cache connections and also cache the results of those connections. This can help speed things up as it will re-use the existing object.

But what if something changed since it cached the connection? Let’s see how we can edit the existing connections if necessary.

My scenario

I was updating some dev instances using what has to be one of my favorite commands, Update-DbaInstance. Here’s what my command looked like:

Update-DbaInstance -ComputerName devbox1.domain.local -Restart -Path "\\fileshare\sql updates" -Credential ad\gareth

For anyone new to dbatools, let’s briefly talk about what this is doing for us based on this example.

- Prompt us for ad\gareth’s password

- Find all SQL Server instances on devbox1

- Search the file share "\\fileshare\sql updates" for updates relevant to any instances we found

- If a Windows restart is required before installing patches, then restart

- Prompt the user to install patches for any instances that were found and need updating

- Finally, it will restart the computer after patching

Works like a charm! For more details and examples, you can check out the Update-DbaInstance help page.

Back on topic

I came to update one instance and my credentials were not working: I received an Access Denied message in PowerShell. I did what was necessary to get my permissions added on the Windows server and, confident I was good to go, tried again, but still no luck. This time the error was slightly different: “Windows authentication was used, but is known to not work!” Hmmm, not sure what this means? I went off to the dbatools Slack channel for advice and got the answer I needed.

dbatools was showing me this message because I had recently tried to access this server and it knew I didn’t have permission; it remembered and wasn’t going to waste time trying again. But now I do have permission, so I need it to forget and try again. To do that, we use Set-DbaCmConnection.

Set-DbaCmConnection -ComputerName devbox1.domain.local -ResetCredential -ResetConnectionStatus -DisableBadCredentialCache

This will give us a fresh start with this server and cause dbatools to try to authenticate again, resulting in a good connection object. Also, by using the -DisableBadCredentialCache flag, we can stop it from caching bad credentials while we debug our access issues.

Check out the Set-DbaCmConnection help page for further information.
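
If you want to see what dbatools has remembered before resetting anything, the companion command Get-DbaCmConnection can be used. A minimal sketch, reusing the server name from the example above:

```powershell
# List every computer-management connection dbatools has cached in this session
Get-DbaCmConnection

# Or look at just the server from the example above
Get-DbaCmConnection -ComputerName devbox1.domain.local
```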

- Gareth

Our team had some lofty goals and met a vast majority of them. In the end, my personal goal for dbatools 1.0 was to have a tool that is not only useful and fun to use but trusted and stable as well. Mission accomplished: over the years, hundreds of thousands of people have used dbatools and dbatools is even recommended by Microsoft.


Before we get started with what’s new, let’s take a look at some history.

historical milestones

dbatools began in July of 2014 when I was tasked with migrating a SQL Server instance that supported SharePoint. No way did I want to do that by hand! Since then, the module has grown into a full-fledged data platform solution.

- 07/2014 – Started

- 07/2014 – Published to GitHub & ScriptCenter

- 06/2016 – First major contributors

- 01/2017 – Road to 1.0 began

- 03/2018 – Switch from GPL to MIT

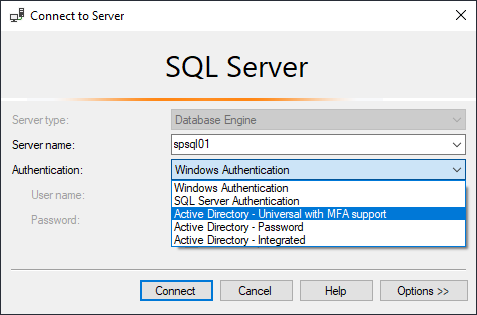

- 05/2019 – Added MFA Support

- 06/2019 – Over 160 contributors and 550 commands

Thanks so much to every single person who has volunteered any time to dbatools. You’ve helped change the SQL Server landscape.

improvements

Over the past six months, we’ve made a ton of enhancements that we haven’t even had time to share. Here are a few.

availability groups

Availability Group support has been solidified and is looking good and New-DbaAvailabilityGroup is better than ever. Try out the changes and let us know how you like them.

Get-Help New-DbaAvailabilityGroup -Examples

authentication support

We now also support all the different ways to login to SQL Server! So basically this:

Want to try it for yourself? Here are a few examples.

# AAD Integrated Auth

Connect-DbaInstance -SqlInstance psdbatools.database.windows.net -Database dbatools

# AAD Username and Pass

Connect-DbaInstance -SqlInstance psdbatools.database.windows.net -SqlCredential [email protected] -Database dbatools

# Managed Identity in Azure VM w/ older versions of .NET

Connect-DbaInstance -SqlInstance psdbatools.database.windows.net -Database abc -SqlCredential appid -Tenant tenantguidorname

# Managed Identity in Azure VM w/ newer versions of .NET (way faster!)

Connect-DbaInstance -SqlInstance psdbatools.database.windows.net -Database abc -AuthenticationType 'AD Universal with MFA Support'

You can also find a couple more within the MFA Pull Request on GitHub and by using Get-Help Connect-DbaInstance -Examples.

registered servers

This is probably my favorite! We now support Local Server Groups and Azure Data Studio groups. Supporting Local Server Groups means that it’s now a whole lot easier to manage servers that don’t use Windows Authentication.

Here’s how you can add a local docker instance.

# First add it with your credentials

Connect-DbaInstance -SqlInstance 'dockersql1,14333' -SqlCredential sqladmin | Add-DbaRegServer -Name mydocker

# Then just use it for all of your other commands.

Get-DbaRegisteredServer -Name mydocker | Get-DbaDatabase

Totally dreamy

csv

Import-DbaCsv is now far more reliable. While the previous implementation was faster, it didn’t work a lot of the time. The new command should suit your needs well.

Get-ChildItem C:\allmycsvs | Import-DbaCsv -SqlInstance sql2017 -Database tempdb -AutoCreateTable
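
For a single file, a sketch like the following should also do the job. The file path and table name are hypothetical.

```powershell
# Import one CSV into a named table, creating the table if it does not exist yet
Import-DbaCsv -Path C:\temp\housing.csv -SqlInstance sql2017 -Database tempdb -Table housing -AutoCreateTable
```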

future & backwards compatible

In the past couple months, we’ve started focusing a bit more on Azure: both Azure SQL Database and Managed Instances. In particular, we now support migrations to Azure Managed Instances! We’ve also made a couple more commands available on PowerShell Core, in particular the Masking and Data Generation commands. Over 75% of our commands run on mac OS and Linux!

Still, we support PowerShell 3 and Windows 7 and SQL Server 2000 when we can. Our final testing routines included ensuring support for:

- Windows 7

- SQL Server 2000-2019

- User imports vs Developer imports

- mac OS / Linux

- x86 and x64

- Strict (AllSigned) Execution Policy

new commands

We’ve also added a bunch of new commands, mostly revolving around Roles, PII, Masking, Data Generation and even ADS notebooks!

Want to see the full list? Check out our freshly updated Command Index page.

configuration enhancements

A few configuration enhancements have been made and a blog post for our configuration system is long overdue. But one of the most useful, I think, is that you can now control the client name. This is the name that shows up in logs, in Profiler and in Xevents.

# Set it

Set-DbatoolsConfig -FullName sql.connection.clientname -Value "my custom module built on top of dbatools" -Register

# Double check it

Get-DbatoolsConfig -FullName sql.connection.clientname | Select Value, Description

The -Register parameter is basically a shortcut for piping to Register-DbatoolsConfig. This writes the value to the registry; otherwise, it’ll be effective only for your current session.
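
To illustrate the two-step equivalent, here is a minimal sketch that sets the value for the session first and persists it afterwards.

```powershell
# Set the value for the current session only
Set-DbatoolsConfig -FullName sql.connection.clientname -Value "my custom module built on top of dbatools"

# Persist the current value so that future sessions pick it up
Get-DbatoolsConfig -FullName sql.connection.clientname | Register-DbatoolsConfig
```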

Another configuration enhancement helps with standardization. Now, all export commands will default to Documents\DbatoolsExport. You can change it by issuing the following commands.

# Set it

Set-DbatoolsConfig -FullName path.dbatoolsexport -Value "C:\temp\exports" -Register

# Double check it

Get-DbatoolsConfig -FullName path.dbatoolsexport | Select Value, Description

help is separated

Something new that I like because it’s “proper” PowerShell: we’re now publishing our module with Help separated into its own file. We’re using a super cool module called HelpOut. HelpOut was created for dbatools by a former member of the PowerShell team, James Brundage.

HelpOut allows our developers to keep writing Help within the functions themselves, then separates the Help into dbatools-help.xml and the commands into allcommands.ps1, which helps with faster loading. Here’s how we do it:

Install-Maml -FunctionRoot functions, internal\functions -Module dbatools -Compact -NoVersion

It’s as simple as that! This does all of the heavy lifting: making the maml file and placing it in the proper location, and parsing the functions for allcommands.ps1!

Help will continue to be published to docs.dbatools.io and updated with each release. You can read more about HelpOut on GitHub.

breaking changes

We’ve got a number of breaking changes included in 1.0.

Before diving into this section, I want to emphasize that we have a command to handle a large majority of the renames! Invoke-DbatoolsRenameHelper will parse your scripts and replace script names and some parameters for you.
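
A typical invocation might look like the sketch below. C:\scripts is a hypothetical folder holding your existing scripts, and since the command edits files, it is probably wise to run it against a copy or a folder under source control.

```powershell
# Feed every .ps1 file under the folder to the rename helper
Get-ChildItem C:\scripts\*.ps1 -Recurse | Invoke-DbatoolsRenameHelper
```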

command renames

Renames in the past 30 days were mostly changing Instance to Server. But we also made some command names more accurate:

Test-DbaDbVirtualLogFile -> Measure-DbaDbVirtualLogFile

Uninstall-DbaWatchUpdate -> Uninstall-DbatoolsWatchUpdate

Watch-DbaUpdate -> Watch-DbatoolsUpdate

command removal

Export-DbaAvailabilityGroup has been removed entirely. The same functionality can now be found using Get-DbaAvailabilityGroup | Export-DbaScript.
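
Spelled out against an instance, the replacement pipeline would look roughly like this. sql2017 is only an example instance name.

```powershell
# Script out every Availability Group found on the instance
Get-DbaAvailabilityGroup -SqlInstance sql2017 | Export-DbaScript
```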

alias removals

All but 5 command aliases have been removed. Here are the ones that are still around:

Get-DbaRegisteredServer -> Get-DbaRegServer

Attach-DbaDatabase -> Mount-DbaDatabase

Detach-DbaDatabase -> Dismount-DbaDatabase

Start-SqlMigration -> Start-DbaMigration

Write-DbaDataTable -> Write-DbaDbTableData

I kept Start-SqlMigration because that’s where it all started, and the rest are easier to remember.

Also, all ServerInstance and SqlServer aliases have been removed. You must now use SqlInstance. For a full list of what Invoke-DbatoolsRenameHelper renames/replaces, check out the source code.

parameter standardization

Most commands now follow these practices, which we’ve observed in Microsoft’s PowerShell modules.

- Piped input is -InputObject and not DatabaseCollection or LoginCollection, etc.

- Directory (and some file) paths are now -Path and not BackupLocation or FileLocation

- When a distinction is required, file paths are now -FilePath, and not RemoteFile or BackupFileName

- If both file and directory path needs to be distinguished, Path is used for directory and FilePath for file locations
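
Backup-DbaDatabase is one command where these conventions can be seen side by side. A sketch, with a hypothetical instance, database and paths:

```powershell
# Piped input arrives through -InputObject; -Path names the backup directory
Get-DbaDatabase -SqlInstance sql2017 -Database mydb | Backup-DbaDatabase -Path C:\temp\backups

# When a specific file is meant as well, -FilePath carries the file name
Backup-DbaDatabase -SqlInstance sql2017 -Database mydb -Path C:\temp\backups -FilePath mydb_full.bak
```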

parameter removal

-SyncOnly is no longer an option in Copy-DbaLogin. Please use Sync-DbaLoginPermission instead.
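
In its simplest form, the replacement looks something like this. sql01 and sql02 are hypothetical source and destination instances.

```powershell
# Sync permissions for logins that already exist on both instances
Sync-DbaLoginPermission -Source sql01 -Destination sql02
```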

-CheckForSql is no longer an option in Get-DbaDiskSpace. Perhaps the functionality can be made into a new command which can be piped into Get-DbaDiskSpace, but the implementation we had was not worth keeping.

For a full list of breaking changes, you can browse our gorgeous changelog, maintained by Andy Levy.

book party!

In case you did not hear the news, Rob Sewell and I are currently writing dbatools in a Month of Lunches! We’re really excited and hope to have a MEAP (Manning Early Access Program) available sometime in July. We will keep everyone updated here and on Twitter.

The above is what the editor looks like – a lot like markdown!

If you’d like to see what the writing process is like, I did a livestream a couple of months back while writing Chapter 6, which is about Find-DbaInstance. Sorry about the music being a bit loud, that has been fixed in future streams which can be found at youtube.com/dbatools.

sponsorship

Since Microsoft acquired GitHub, they’ve been rolling out some really incredible features. One such feature is Developer Sponsorships, which allows you to sponsor developers with cash subscriptions. It’s sorta like Patreon where you can pay monthly sponsorships with different tiers. If you or your company has benefitted from dbatools, consider sponsoring one or more of our developers.

Currently, GitHub has approved four of our team members to be sponsored including me, Shawn Melton, Stuart Moore and Sander Stad.

We’ve invited other dbatools developers to sign up as well.

Oh, and for the first year, GitHub will match sponsorship funds! So giving to us now is like giving double.

big ol thanks

I’d like to give an extra special thanks to the contributors who helped get dbatools across the finish line these past couple months: Simone Bizzotto, Joshua Corrick, Patrick Flynn, Sander Stad, Cláudio Silva, Shawn Melton, Garry Bargsley, Andy Levy, George Palacios, Friedrich Weinmann, Jess Pomfret, Gareth N, Ben Miller, Shawn Tunney, Stuart Moore, Mike Petrak, Bob Pusateri, Brian Scholer, John G “Shoe” Hohengarten, Kirill Kravtsov, James Brundage, Hüseyin Demir, Gianluca Sartori and Rob Sewell.

Without you all, 1.0 would be delayed for another 5 years.

blog party!

Want to know more about dbatools? Check out some of these posts

dbatools 1.0 – the tools to break down the barriers – Shane O’Neill

dbatools 1.0 is here and why you should care – Ben Miller

dbatools 1.0 and beyond – Joshua Corrick

dbatools 1.0 – Dusty R

Your DBA Toolbox Just Got a Refresh – dbatools v1.0 is Officially Available!!! – Garry Bargsley

dbatools v1.0? It’s available – Check it out!

updating sql server instances using dbatools 1.0 – Gareth N

livestreaming

We’re premiering dbatools 1.0 at DataGrillen in Lingen, Germany today and will be livestreaming on Twitch.

Thank you, everyone, for your support along the way. We all hope you enjoy dbatools 1.0


Chrissy

Welcome to a quick post that should help you operate your SQL Server environment more consistently and reduce manual, repetitive work.

The Problem

When you are running SQL Server Availability Groups, one of the most cumbersome tasks is to ensure that all logins are synchronised between all replicas. While not exactly rocket science, it is something that quickly means a lot of work if you are managing more than one or two Availability Groups.

Wouldn’t it be nice to have a script that is flexible enough to

- be called by only specifying the Availability Group Listener

- detect all replicas and their roles automatically

- connect to the primary, read all SQL logins and apply them to EVERY secondary automatically?

Well, dbatools to the rescue again.

The solution

With dbatools, such a routine takes only a few lines of code.

The below script connects to the Availability Group Listener, queries it to get the current primary replica, as well as every secondary replica and then synchronizes all logins to each secondary.

In the template code, no changes are actually written due to the -WhatIf switch, so that you can safely test it to see what changes would be committed.

<#

Script : SyncLoginsToReplica.ps1

Author : Andreas Schubert (http://www.linkedin.com/in/schubertandreas)

Purpose: Sync logins between all replicas in an Availability Group automatically.

--------------------------------------------------------------------------------------------

The script will connect to the listener name of the Availability Group

and read all replica instances to determine the current primary replica and all secondaries.

It will then connect directly to the current primary, query all Logins and create them on each

secondary.

Attention:

The script is provided so that no action is actually executed against the secondaries (switch -WhatIf).

Change that line according to your logic, you might want to exclude other logins or decide to not drop

any existing ones.

--------------------------------------------------------------------------------------------

Usage: Save the script in your file system, change the name of the AG Listener (AGListenerName in this template)

and schedule it to run at your preferred schedule. I usually sync logins once per hour, although

on more volatile environments it may run as often as every minute

#>

# define the AG name

$AvailabilityGroupName = 'AGListenerName'

# internal variables

$ClientName = 'AG Login Sync helper'

$primaryInstance = $null

$secondaryInstances = @{}

try {

# connect to the AG listener, get the name of the primary and all secondaries

$replicas = Get-DbaAgReplica -SqlInstance $AvailabilityGroupName

$primaryInstance = $replicas | Where Role -eq Primary | select -ExpandProperty name

$secondaryInstances = $replicas | Where Role -ne Primary | select -ExpandProperty name

# create a connection object to the primary

$primaryInstanceConnection = Connect-DbaInstance $primaryInstance -ClientName $ClientName

# loop through each secondary replica and sync the logins

$secondaryInstances | ForEach-Object {

$secondaryInstanceConnection = Connect-DbaInstance $_ -ClientName $ClientName

Copy-DbaLogin -Source $primaryInstanceConnection -Destination $secondaryInstanceConnection -ExcludeSystemLogins -WhatIf

}

}

catch {

$msg = $_.Exception.Message

Write-Error "Error while syncing logins for Availability Group '$AvailabilityGroupName': $msg"

}

To make the tool reusable, you could easily turn the 2 variables into parameters of the script or wrap the logic in a function. Then you could call it from any other script like

.\SyncLoginsToReplica.ps1 -AvailabilityGroupName YourAGListenerName -ClientName "Client"

For simplicity, I created this as a standalone script though.
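
For readers who do want the reusable form, here is one way it might look. This is a sketch rather than a tested implementation: the function name Sync-AgLogin is hypothetical, and -EnableException is added on the first call on the assumption that you want dbatools to throw so the catch block actually runs (the same switch can be added to the other calls).

```powershell
function Sync-AgLogin {
    # Hypothetical helper: the function name and the default client name are illustrative only
    [CmdletBinding()]
    param (
        [Parameter(Mandatory)]
        [string]$AvailabilityGroupName,

        [string]$ClientName = 'AG Login Sync helper'
    )

    try {
        # Connect to the AG listener and read all replicas
        # -EnableException makes dbatools throw instead of warn, so the catch block can react
        $replicas = Get-DbaAgReplica -SqlInstance $AvailabilityGroupName -EnableException
        $primary = $replicas | Where-Object Role -eq Primary | Select-Object -ExpandProperty Name
        $secondaries = $replicas | Where-Object Role -ne Primary | Select-Object -ExpandProperty Name

        # Create a connection object to the primary
        $primaryConnection = Connect-DbaInstance -SqlInstance $primary -ClientName $ClientName

        # Loop through each secondary replica and sync the logins
        foreach ($secondary in $secondaries) {
            $secondaryConnection = Connect-DbaInstance -SqlInstance $secondary -ClientName $ClientName

            # As in the template, -WhatIf keeps this a dry run; remove it once the output looks right
            Copy-DbaLogin -Source $primaryConnection -Destination $secondaryConnection -ExcludeSystemLogins -WhatIf
        }
    }
    catch {
        Write-Error "Error while syncing logins for Availability Group '$AvailabilityGroupName': $($_.Exception.Message)"
    }
}
```

After dot-sourcing the file that contains it, the function could be called with `Sync-AgLogin -AvailabilityGroupName YourAGListenerName`.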

I hope you find this post useful. For questions and remarks please feel free to message me!
